OpenAI's ChatGPT reached an estimated 100 million monthly active users about two months after its launch, which analysts at the time called the fastest growth of any consumer application in history. For the software industry, ChatGPT and the large language models (LLMs) behind it represent a fundamental shift in how code is written, tested, and maintained.

The LLM Revolution

ChatGPT is built on GPT (Generative Pre-trained Transformer), a family of large language models trained on vast amounts of text and code. Several capabilities make it transformative for software development:

- Natural language to code — Describe what you want in plain English, get working code

- Code explanation — Paste complex code and get clear explanations

- Bug identification — Describe symptoms and get debugging suggestions

- Architecture advice — Discuss trade-offs and get informed recommendations

How Developers Are Using LLMs

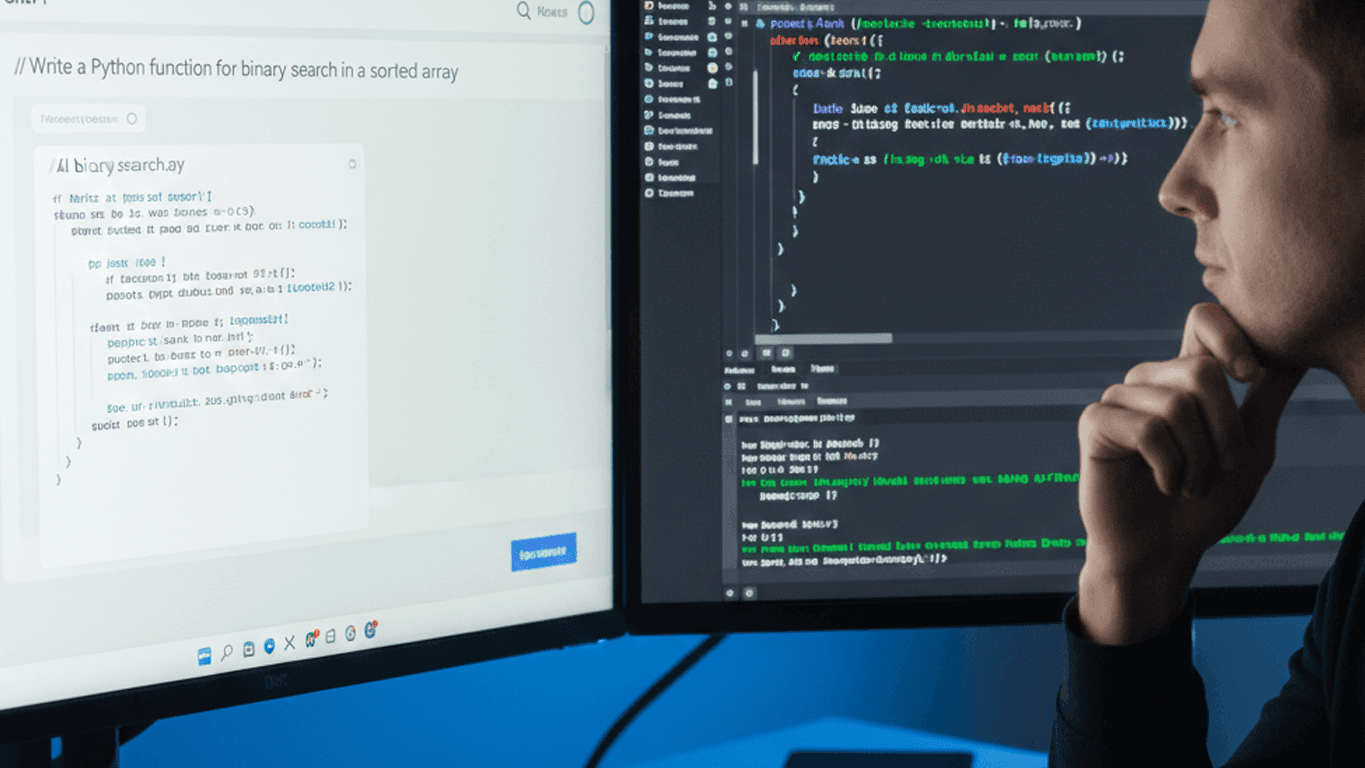

Code Generation

LLMs excel at generating boilerplate code, implementing standard patterns, and translating between languages. Developers report significant productivity gains for routine coding tasks.

Testing

AI can generate unit tests from code, suggest edge cases, and create test data. This is particularly valuable for increasing test coverage on legacy codebases.
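As an illustration, the sketch below shows the kind of test suite an assistant might propose for a small legacy helper. The function `parse_price` and its behavior are hypothetical, invented here only to have something to test; the point is the shape of the output: one ordinary case plus the edge cases (separators, whitespace, empty and non-numeric input) that hand-written suites often skip.

```python
import unittest


def parse_price(text: str) -> float:
    """Hypothetical legacy helper: turn a string like '$1,299.50' into a float."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    if not cleaned:
        raise ValueError("empty price")
    return float(cleaned)  # float() raises ValueError on non-numeric text


class ParsePriceTests(unittest.TestCase):
    def test_plain_number(self):
        self.assertEqual(parse_price("42"), 42.0)

    def test_currency_symbol_and_thousands_separator(self):
        self.assertEqual(parse_price("$1,299.50"), 1299.5)

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_price("  7.25 "), 7.25)

    def test_empty_string_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("   ")

    def test_non_numeric_text_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("free")
```

Saved to a file, this can be run with `python -m unittest`. Generated tests still need a human read-through: a test that merely encodes the current behavior of buggy code will pass while locking the bug in.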

Documentation

Generating documentation from code — including API docs, README files, and inline comments — is one of the most consistently useful applications.

Code Review

LLMs can identify potential issues, suggest improvements, and explain complex code changes, augmenting human code review processes.

Learning and Problem-Solving

Developers use ChatGPT as an interactive learning tool, exploring new frameworks and debugging unfamiliar errors through conversational interaction.

Limitations and Risks

Hallucination

LLMs can generate plausible-looking but incorrect code. They may reference non-existent APIs, use deprecated patterns, or introduce subtle logical errors. Every output must be verified.
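One cheap first check for the non-existent API problem is to confirm that a name the generated code relies on can actually be resolved. The helper below is a minimal sketch, not a complete verification step: `api_exists` is a name made up for this example, it imports the module it checks (so it should only be pointed at packages you already trust), and a name that exists can still be used incorrectly.

```python
import importlib


def api_exists(dotted_name: str) -> bool:
    """Return True if a dotted name such as 'json.dumps' resolves to a real object."""
    parts = dotted_name.split(".")
    # Try the longest importable module prefix first, then walk the
    # remaining parts as attributes of that module.
    for split in range(len(parts), 0, -1):
        module_name = ".".join(parts[:split])
        try:
            obj = importlib.import_module(module_name)
        except (ImportError, ValueError):
            continue  # not a module at this depth; try a shorter prefix
        for attr in parts[split:]:
            if not hasattr(obj, attr):
                return False
            obj = getattr(obj, attr)
        return True
    return False


# 'json.dumps' is real; 'json.dump_pretty' is an invented name of the
# kind an LLM might plausibly produce.
print(api_exists("json.dumps"))
print(api_exists("json.dump_pretty"))
```

A check like this only catches names that do not exist. Subtle logic errors and deprecated patterns still call for tests and code review.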

Training Data Cutoff

LLMs have knowledge cutoffs and may not be aware of recent library updates, security vulnerabilities, or best practice changes.
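A practical consequence is that generated code may target an older major version of a library than the one installed. The sketch below compares the two using the standard library's `importlib.metadata`. The helper names are hypothetical, and the version parsing is deliberately simplistic: it assumes plain dotted numeric versions such as `2.1.0` and does not handle pre-release tags.

```python
from importlib import metadata
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse a plain dotted numeric version such as '2.1.0' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def major_version_drift(installed: str, assumed: str) -> bool:
    """True when the installed major version is newer than the one the code assumes."""
    return parse_version(installed)[0] > parse_version(assumed)[0]
```

For example, `major_version_drift("2.1.0", "1.26.4")` returns `True`, a signal to read the library's migration notes before trusting a generated snippet written against the 1.x API.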

Over-Reliance

There is a real risk of developers accepting AI output without understanding it. Code that nobody on the team fully understands is harder to change safely and can hide security vulnerabilities, and both problems compound over time.

The Evolving Developer Role

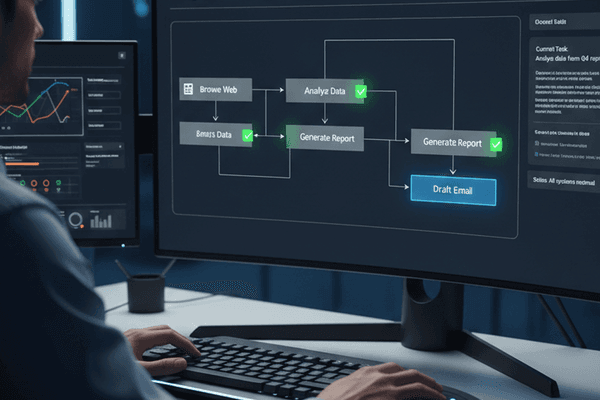

The role of the software developer is shifting from primarily writing code to:

- Designing systems — Architecture and system design become more important as implementation becomes faster

- Reviewing and verifying — Ensuring AI-generated code is correct, secure, and maintainable

- Prompt engineering — Learning to effectively communicate requirements to AI tools

- Integration — Combining AI-generated components into cohesive, well-tested systems

Our Perspective

At Xelent Solutions, we view AI as a powerful tool that amplifies developer capabilities. We encourage our team to use AI assistants while maintaining rigorous code review, testing, and security practices.

The developers who will thrive are those who embrace AI as a collaborator while continuing to invest in deep technical understanding. AI can write code and suggest options, but it cannot yet fully understand business context, own the final call on architectural trade-offs, or take responsibility for system reliability.